Artificial Intelligence (AI) is fast becoming a powerhouse for individuals and businesses, offering automation, data-led insights and boosted efficiency. This huge opportunity, however, comes with challenges and risks that need to be carefully considered. With AI uptake moving quicker than many expected, Responsible AI needs to match the pace.

Alphinity has been digging into the ethics of AI technologies for quite some time, considering the potential risks to companies, society and the environment. This curiosity led to an exciting collaboration with Australia’s premier national science research agency, Commonwealth Scientific and Industrial Research Organisation (CSIRO), in 2023. We co-developed a landmark Responsible AI Framework which was released in 2024. Now, after a year of use, we have some reflections to share. This article highlights AI use cases that we see companies adopting, some of the related ESG risks, and notable company engagements that were driven by applying the framework to our investments.

Spotlight: Responsible AI Framework

In May 2024, Alphinity and CSIRO released a Responsible AI Framework (RAI Framework) to help both investors and companies navigate the flourishing AI opportunity. The framework is a practical, three-part toolkit that bridges the gap between emerging responsible AI considerations and existing ESG principles such as workforce, customer, data privacy and social licence.

The framework is designed to set a standard in responsible AI and can be used flexibly depending on the investor’s scope and needs. It is also intended to help companies understand investor expectations around responsible AI implementation and disclosure.

The report and toolkit can be explored here: A Responsible AI Framework for Investors – Alphinity

Why should investors care about responsible AI today?

AI holds significant potential but also presents various environmental and social risks.

For instance, the reliance on data centres leads to increased greenhouse gas emissions, which may result in climate change-related risks, including carbon pricing. Additionally, the high water usage of data centres may need to be restricted during droughts, or may become subject to future regulation, as recently proposed in Europe.

The business stability of entities within the AI value chain could be adversely affected if these issues are not promptly identified and managed. These risks are prevalent throughout the AI value chain, from semiconductor producers to software providers, but are particularly significant in the short-term for hyperscalers such as Microsoft, Alphabet, and Amazon.

AI can help drive automation, supercharge productivity and assist with the performance of repetitive tasks. But what happens when workers are displaced, or when the AI tool hallucinates or malfunctions? Employees and unions could react, creating social tension and affecting customer service. Wider operational disruptions and/or cybersecurity issues are also possibilities.

In the healthcare industry, the cost and timing benefits of AI in clinical trials and product development need to be balanced against the potential risks to data quality, bias and real-world validation of AI-led drug discovery.

Within the banking sector, AI is being used to enhance customer service, fraud detection and credit decisions. These applications can help combat crime, improve customer satisfaction and open access for the underbanked. However, if implemented poorly, they might invite regulatory scrutiny.

We believe that for AI opportunities to be realised, the governance, design, and application of AI need to be undertaken responsibly, taking into account environmental, social, and evolving regulatory considerations and mitigating adverse impacts wherever possible.

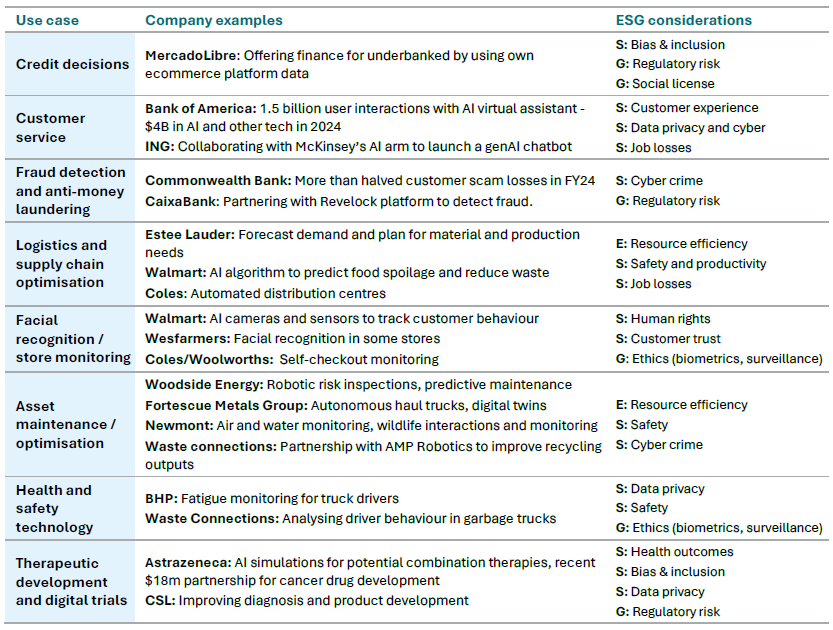

To help us think through these implications, we take a use-case-first approach. That is, we identify the relevant use cases by sector or company, then assess the relevant ESG considerations, including the company-specific mitigation efforts. This has supported more proactive and targeted research and engagement with companies and has enabled us to better identify and integrate the various risks and considerations into our ESG assessments.

This approach has been illustrated in the table below. It presents some of the more common use cases, some company examples and the relevant ESG threats and/or opportunities.

40+ company meetings: Continued engagement on responsible AI

Since publishing the framework, our focus has been on assessing responsible AI risks and opportunities within our investments.

Building on the 28 company interviews conducted in 2023 for the research project, our engagement with portfolio companies and prospects has continued. These discussions not only shed light on how AI use cases are evolving, they also help us to assess how responsible AI practices are progressing.

Since publishing the report in May last year, we have undertaken a further 15 engagements where insights from our RAI Framework guided the discussions. We shared our framework with organisations such as Wesfarmers, Medibank, AGL, Origin, Aristocrat Leisure, Netflix, Intuitive Surgical, Novonesis, MercadoLibre, Thermo Fisher, CaixaBank and Schneider Electric.

The RAI Framework has been a practical way to communicate the types of information investors seek when evaluating AI-related risks. Pleasingly, companies like Medibank and MercadoLibre have said that the resource has helped guide their internal responsible AI practices.

Insights and examples from these engagements are categorised into: Financial services, Healthcare, Technology products and platforms, and Industrials and energy services.

Financial services

CaixaBank: AI Investment Guided by Governance Framework

CaixaBank, a prominent Spanish bank, is investing €5 billion in AI to benefit millions of customers. The bank has seen early success with AI in customer service claims, call centre operations, and code generation. Provisions of the European AI Act require additional controls and governance mechanisms to ensure the quality of AI outputs in the banking sector. We spoke with the Head of Data Governance to explore the bank’s responsible AI approach and confirmed its preparedness for AI regulation. The company’s AI Governance Framework ensures oversight of AI applications and adherence to principles like cybersecurity, fairness and reliability.

We recommended that CaixaBank publish a responsible AI policy and disclose more on AI implementation to further enhance its leading approach.

Commonwealth Bank of Australia (CBA): Advanced Technology and Responsible AI Strategy

CBA’s responsible AI strategy is globally recognised, leveraging the company’s advanced technological background and the ethical AI programs it has run since 2018. Ranked first in the Evident AI Index for leadership in Responsible AI, CBA collaborates to manage regulatory and reputational risks. The bank introduced a Responsible AI Toolkit in 2024, and 15,000 modules on Generative AI and Deep Learning have been completed. We view CBA’s approach as leading and are supportive of its ongoing disclosures to shareholders.

In 2023, we provided feedback to CBA that it should consider publishing its Responsible AI Policy. The bank published this policy later the same year and is presently one of the few Australian companies with a publicly disclosed position.

Healthcare

Medibank: Leveraging AI for customer service and healthcare analytics

In early 2024, we engaged with health insurer Medibank to explore AI opportunities in healthcare and the way in which it considers related implications such as data privacy, bias and customer trust. The company has been using AI to support customer call experiences and to improve healthcare analytics.

Medibank has established an AI Governance Working Group that evaluates each AI use case before implementation, to consider aspects such as customer, reputation and data risks. We are pleased to share that Medibank has adopted our Responsible AI Framework to benchmark its own practices. Medibank is also considering our feedback on publishing a responsible AI policy and disclosure on AI governance implementation.

Intuitive Surgical: Enhancing minimally invasive surgery and patient outcomes with AI

US medical device company Intuitive Surgical is a pioneer in health technology and has moved to improve robotic surgery processes through machine learning and predictive analytics. We engaged with the company to better understand these exciting use cases and explore its responsible AI strategy. For instance, postoperative recommendations have become more effective as they combine surgery indicators, such as blood loss or operating time, with patient outcomes like pain levels and recovery.

Future opportunities point to AI being used within surgery, for example staplers using AI to measure and adjust tissue compression in real-time to help with precision and patient recovery. The company manages cybersecurity and data privacy to high standards, and we suggested that publishing a responsible AI policy that outlines governance – including its management of important risks like bias and quality control – would be useful to investors.

Thermo Fisher: Enhancing healthcare through AI, overseen by a bioethics committee

US healthcare company Thermo Fisher Scientific has been using AI and machine learning for many years to streamline internal operations and improve productivity, especially in clinical trials where AI can support disease detection, drug discovery and diagnostics.

We engaged with the company to learn more about these AI applications and responsible AI considerations. Thermo Fisher highlighted the role of its bioethics committee, which was established in 2019 and has subject matter experts developing a policy commitment, in guiding its responsible AI activities. We provided information on our Responsible AI Framework and encouraged the publication of the policy in line with best practices.

Technology products and platforms

Nvidia: Launched an AI Ethics Committee and customer KYC process

Nvidia is a renowned AI enabler that supplies more than 40,000 companies, including 18,000 AI startups. Early in 2025, we met with the company to discuss the balance between the sustainability solutions that AI could bring and the energy and water needed to power these tools. Nvidia highlighted that AI provides many exciting solutions like advanced weather modelling for adaptation and resilience, enhanced maintenance practices via digital twins, and automation and route optimisation to lessen carbon emissions in manufacturing and transport. The company is working to disclose these different end-markets, along with energy and water use, which will offer greater insight into Nvidia’s sustainability contributions.

Nvidia also established an AI ethics committee in 2024 to oversee the development of AI with an emphasis on trust and ethics. The committee’s initial focus was to identify new AI use cases and develop a framework to recognise potential risks in product development and customer use. For instance, the committee recommended additional testing and the implementation of guardrails for a specific product, which in turn increased due diligence requirements for sales to certain customers. These recommendations were subsequently adopted by the development and sales teams. We feel this demonstrates a good level of responsible AI integration through the business.

Aristocrat Leisure: Balancing innovation with responsible AI

We conducted a responsible AI assessment utilising our framework and engaged with Aristocrat Leisure to understand the AI use cases across its business. We learned that the more recent generative AI use cases include coding, creative development, marketing and general employee productivity. The company has an AI governance program which includes regular use case reviews by a central AI Working Group. This is a good structural model and the Board receives updates at least semi-annually.

Aristocrat has also engaged external advisors to provide additional guidance on responsible AI. Workforce impacts and employee sentiment are being considered through employee surveys that measure the impact of AI tools. Overall, we observed that Aristocrat is adopting new AI tools, has a good level of workforce adoption and is building a good foundation in responsible AI. We provided feedback that a responsible AI policy would be a good next step.

Industrials and energy services

Schneider Electric: Enhancing AI and industrial automation

Since 2021, French electrical parts company Schneider Electric has expanded AI hubs in India, France, and the US to improve electrification, energy efficiency, and automation. It plans to invest more than $700 million in the US to enable AI growth, domestic manufacturing and energy security, creating 1,000+ new jobs and boosting digital capabilities.

In December 2024, we engaged with the company and discussed AI opportunities and responsible practices. Its AI solutions follow strict governance and ethics standards, managing bias and discrimination through a responsible AI program. A broader AI strategy for 2025-2030 is in development, and we provided our research report as feedback. We also recommended publishing an AI policy to enhance confidence in managing AI risks and opportunities.

AGL: AI in energy networks

AGL Energy has been using AI for some time in various areas, including energy generation, network maintenance and the electricity retailing part of the business. AGL recently introduced a technology strategy in which AI is one of the four key pillars, and one of the significant use cases discussed was predictive maintenance. AGL is on the journey to embed responsible AI practices into its operations and is considering suitable governance structures.

Reflections and conclusion

As companies continue to invest in AI, the transformative business impacts are becoming increasingly clear. As described in the company engagement examples above, AI’s potential is evident in areas such as healthcare, industrial automation, energy management, and improving general productivity through processes like coding, customer service and marketing.

From a responsible AI perspective, we have noticed an increase in cross-functional governance structures and policy commitments, as well as a growing awareness of the legal, ethical and ESG risks that come with AI deployment.

In terms of external benchmarking, there has been some progress, including the finalisation of the ISO/IEC 42001 AI management system standard, which indicates a trend towards verified AI systems.

However, disclosure of important responsible AI metrics, as detailed in the deep-dive component of our framework, is still in its early stages. We would like to see metrics such as the number of AI-related incidents, energy usage from applying AI, cost savings from AI, the number and outcomes of AI audits, and the number and types of complaints related to AI. With ‘agentic AI’ now the next frontier, we are aware this will bring another level of complexity to AI decisions.

We feel that responsible AI governance structures, such as those outlined in our framework, can help organisations to harness AI opportunities, while steering away from some of the risks.

Therefore, our three main engagement priorities for portfolio companies are to:

- Keep requesting that a responsible AI policy statement is developed and published.

- Encourage the development of use-case-relevant governance and controls. Best-in-class approaches will have a position on high-risk or sensitive use cases.

- Encourage adequate awareness of, and alignment with, external responsible AI standards, possibly through industry collaboration and peer networks.

Regulatory developments continue to progress (for example, the EU AI Act and the recent AI Action Summit where a joint declaration on inclusive and sustainable AI was signed by 58 countries) but we have observed that no significant or compelling regulations have emerged recently. As such, we continue to monitor resources such as the World Benchmarking Alliance’s Digital Benchmark as well as newer benchmarks like the Evident AI Index, which offer useful insights.

We have also been broadening our research scope to benchmark and evaluate the ESG risks within the AI value chain, including emissions, energy, and water usage in data centres. We hope to be able to share more on this in future.
